- jo@muidatech.com

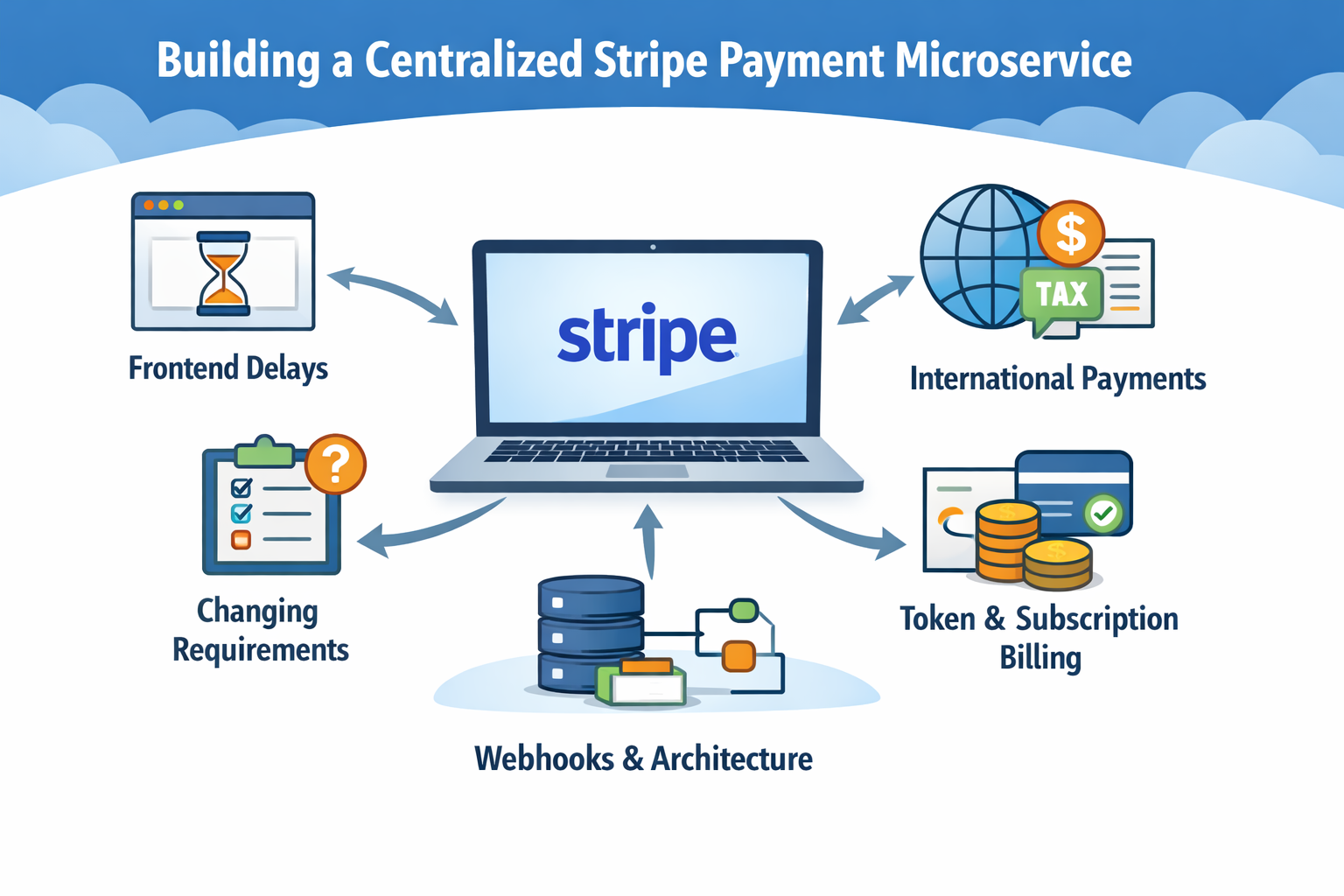

Building a Centralized Stripe Payment Microservice

Lessons from changing requirements, architecture challenges, and real-world payment engineering

When I started building this Stripe-based payment microservice, the goal seemed clear: create a centralized, reusable billing service that multiple SaaS platforms could integrate with through a consistent API.

In reality, the project evolved into something far more complex. Requirements shifted, dependencies weren’t always ready, frontend timelines moved, and the business model itself changed mid-development. What began as a payment integration turned into a deep exercise in architecture, reliability, and system thinking.

This is the story of how the system evolved—and what it took to make it production-ready.

The Objective: One Payment Service, Many Platforms

The purpose of the project was to eliminate duplicated payment logic across multiple internal SaaS products. Instead of each platform integrating Stripe independently—with its own edge cases, webhook logic, and subscription handling—the idea was to centralize everything into a single microservice.

The service needed to:

- Expose a unified REST API for all payment flows

- Support multiple billing models (subscriptions, credits, usage-based billing, flat-rate purchases)

- Handle the entire lifecycle from checkout to access provisioning

- Keep identity and billing state synchronized across systems

It had to work as infrastructure, not just as an implementation detail.

Challenge 1: Incomplete Business Inputs

One of the earliest obstacles wasn’t technical—it was informational.

To build a robust payment system, you need clarity around:

- pricing structures

- subscription tiers

- access rules

- refund behavior

- usage calculation

- callback expectations

Some of these were defined late or changed over time. That meant the system couldn’t be rigid. It had to be designed to absorb change.

Instead of coding for fixed flows, I built flexible abstractions: modular payment strategies, metadata-based routing, and database structures that could evolve without breaking integrations.
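The sketch below is illustrative only and is not taken from the service itself. It shows how "modular payment strategies" and "metadata-based routing" can fit together: each billing model registers a handler, and a hypothetical `billing_model` metadata key on the checkout picks which one runs. The class names, registry, and metadata keys are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class CheckoutResult:
    """Hypothetical outcome of fulfilling a completed checkout."""
    billing_model: str
    action: str


# A strategy turns checkout metadata (Stripe metadata values are strings) into an action.
Strategy = Callable[[Dict[str, str]], CheckoutResult]

# Hypothetical registry: billing-model key -> strategy function.
_STRATEGIES: Dict[str, Strategy] = {}


def register(billing_model: str) -> Callable[[Strategy], Strategy]:
    """Decorator that adds a strategy to the registry under a billing-model key."""
    def wrap(fn: Strategy) -> Strategy:
        _STRATEGIES[billing_model] = fn
        return fn
    return wrap


@register("subscription")
def handle_subscription(meta: Dict[str, str]) -> CheckoutResult:
    return CheckoutResult("subscription", f"activate plan {meta['plan']}")


@register("credits")
def handle_credits(meta: Dict[str, str]) -> CheckoutResult:
    return CheckoutResult("credits", f"add {int(meta['credits'])} credits")


def fulfill(meta: Dict[str, str]) -> CheckoutResult:
    """Route on the hypothetical 'billing_model' metadata key; reject unknown models."""
    model = meta.get("billing_model")
    strategy = _STRATEGIES.get(model or "")
    if strategy is None:
        raise ValueError(f"unsupported billing model: {model!r}")
    return strategy(meta)
```

For example, `fulfill({"billing_model": "credits", "credits": "500"}).action` returns `"add 500 credits"`. With this shape, supporting a new billing model means registering one more function. The routing code and the API surface stay unchanged.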

This significantly influenced the final architecture.

Challenge 2: Frontend Delays and Backend Stability

The frontend portion of the ecosystem took longer than planned. This created a common integration challenge: the backend needed stable contracts early, while frontend requirements were still evolving.

To address this, I treated the backend as a platform product:

- Clearly structured REST endpoints

- Strict request/response schemas

- OpenAPI documentation for easy consumption

- Mockable flows to support parallel development

- Strong validation and error messaging

Focusing on API clarity and consistency reduced integration friction, even when timelines shifted.
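Here is a minimal standard-library sketch of strict request validation that returns field-level error messages. The endpoint shape, the field names (`user_id`, `price_id`, `quantity`, `success_url`), and the error format are hypothetical. A real service would more likely derive this from its OpenAPI schema or a validation library.

```python
from dataclasses import dataclass
from typing import Any, Dict, List
from urllib.parse import urlparse

ALLOWED_FIELDS = {"user_id", "price_id", "quantity", "success_url"}  # hypothetical contract


@dataclass(frozen=True)
class CheckoutRequest:
    """Hypothetical validated body for a 'create checkout session' endpoint."""
    user_id: str
    price_id: str
    quantity: int
    success_url: str


class ValidationError(Exception):
    """Carries every field error at once so clients can fix them in one pass."""
    def __init__(self, errors: List[Dict[str, str]]):
        super().__init__(f"{len(errors)} validation error(s)")
        self.errors = errors


def parse_checkout_request(body: Dict[str, Any]) -> CheckoutRequest:
    errors: List[Dict[str, str]] = []

    def err(field: str, msg: str) -> None:
        errors.append({"field": field, "message": msg})

    for field in ("user_id", "price_id", "success_url"):
        if not isinstance(body.get(field), str) or not body[field]:
            err(field, "required non-empty string")
    quantity = body.get("quantity", 1)
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(quantity, int) or isinstance(quantity, bool) or quantity < 1:
        err("quantity", "must be a positive integer")
    url = body.get("success_url")
    if isinstance(url, str) and url and urlparse(url).scheme != "https":
        err("success_url", "must be an https URL")
    # Strict schema: unknown fields are rejected instead of silently ignored.
    for field in sorted(set(body) - ALLOWED_FIELDS):
        err(field, "unknown field")
    if errors:
        raise ValidationError(errors)
    return CheckoutRequest(body["user_id"], body["price_id"], quantity, body["success_url"])
```

The handler collects all errors before raising. A frontend working against an evolving contract gets the complete list in one response rather than discovering problems one request at a time.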

Major Shift: From Product Payments to Token-Based Payments

Midway through the project, the business model changed.

The initial approach centered around product-based purchases—users buy a product and gain access. But the model evolved toward token/credit-based payments, where users purchase credits and consume them over time.

This shift affected:

- database schema design

- authorization rules

- webhook outcomes

- usage tracking

- billing reconciliation

Instead of rewriting the system, I refactored it into a more modular design that could support multiple billing strategies without hardcoding assumptions.

This change ultimately made the service more powerful and future-proof.
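One common way to model credits is an append-only ledger. This is an assumption here, not a description of this service's actual schema. In a ledger, the balance is the sum of entries, and consumption is refused if it would push the balance below zero. Each entry is keyed on a unique reference, such as a Stripe event ID or a usage request ID, so grants and debits are safe to retry. The sketch below is in-memory and uses hypothetical names throughout.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


class InsufficientCredits(Exception):
    pass


@dataclass
class CreditLedger:
    """In-memory stand-in for a hypothetical credit ledger table; illustrative only."""
    entries: List[Tuple[str, int, str]] = field(default_factory=list)  # (user_id, delta, reference)
    _seen: Set[str] = field(default_factory=set, repr=False)

    def balance(self, user_id: str) -> int:
        return sum(delta for uid, delta, _ in self.entries if uid == user_id)

    def _append(self, user_id: str, delta: int, reference: str) -> bool:
        # A repeated reference is a retry of an operation already recorded: no-op.
        if reference in self._seen:
            return False
        self._seen.add(reference)
        self.entries.append((user_id, delta, reference))
        return True

    def grant(self, user_id: str, credits: int, reference: str) -> bool:
        if credits <= 0:
            raise ValueError("grant must be positive")
        return self._append(user_id, credits, reference)

    def consume(self, user_id: str, credits: int, reference: str) -> bool:
        if credits <= 0:
            raise ValueError("consumption must be positive")
        if reference in self._seen:
            return False  # already recorded; do not re-check or double-charge
        if self.balance(user_id) < credits:
            raise InsufficientCredits(f"{user_id} has {self.balance(user_id)}, needs {credits}")
        return self._append(user_id, -credits, reference)
```

In a real database, the balance check and the insert would need to run in one transaction (or under a row lock). Otherwise two concurrent requests could both spend the same credits.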

Deep Dive Into Stripe’s Architecture

Integrating Stripe at a production level required more than reading API documentation. It required understanding Stripe’s full billing model:

- Products and Prices

- Customers and metadata

- Subscriptions and invoices

- Checkout sessions

- PaymentIntents

- Webhook event lifecycle

- Retry behavior and delivery guarantees

Stripe is fundamentally event-driven. Payments complete asynchronously. Webhooks arrive later. Sometimes twice. Sometimes out of order.

Designing around this reality meant:

- idempotent processing

- strict signature verification

- transaction logging

- state reconciliation logic

- defensive error handling
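Stripe's official libraries already implement strict signature verification (for example, `stripe.Webhook.construct_event` in Python), and they should be preferred in production. The standard-library sketch below reimplements the scheme Stripe documents for manual verification so the checks are visible. The `Stripe-Signature` header has the form `t=<timestamp>,v1=<signature>`. The signature is an HMAC-SHA256 of `"<timestamp>.<raw body>"` keyed with the endpoint secret. The 300-second tolerance mirrors the official libraries' default and should be treated as a tunable assumption. The function and exception names are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Dict, List, Optional


class SignatureError(Exception):
    pass


def _parse_header(header: str) -> Dict[str, List[str]]:
    """Split 't=...,v1=...,v1=...' into {'t': [...], 'v1': [...]}."""
    parts: Dict[str, List[str]] = {}
    for item in header.split(","):
        key, sep, value = item.strip().partition("=")
        if sep:
            parts.setdefault(key, []).append(value)
    return parts


def verify_stripe_signature(payload: bytes, header: str, secret: str,
                            tolerance: int = 300, now: Optional[int] = None) -> None:
    """Raise SignatureError unless header has a valid, recent v1 signature of payload."""
    parts = _parse_header(header)
    try:
        timestamp = int(parts["t"][0])
    except (KeyError, ValueError):
        raise SignatureError("missing or invalid timestamp") from None
    signatures = parts.get("v1", [])
    if not signatures:
        raise SignatureError("no v1 signature")
    # Sign "<timestamp>.<raw body>" with HMAC-SHA256 using the endpoint secret.
    signed = str(timestamp).encode() + b"." + payload
    expected = hmac.new(secret.encode(), signed, hashlib.sha256).hexdigest().encode()
    # Constant-time comparison; more than one v1 entry can appear (e.g. while rolling secrets).
    if not any(hmac.compare_digest(expected, s.encode()) for s in signatures):
        raise SignatureError("signature mismatch")
    current = int(time.time()) if now is None else now
    # Reject timestamps too far from now to limit replay of captured requests.
    if abs(current - timestamp) > tolerance:
        raise SignatureError("timestamp outside tolerance")
```

`payload` must be the raw request body exactly as received. Re-serializing parsed JSON changes the bytes and breaks verification.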

This is where the service matured into a reliable payment engine.

International Payments and Tax Complexity

Supporting international payments introduced another layer of complexity.

I had to gain a strong understanding of:

- tax-inclusive vs tax-exclusive pricing

- automated tax calculation

- regional VAT rules

- invoice accuracy

- metadata consistency across sessions

Stripe provides tooling, but proper implementation still requires architectural awareness. Taxes affect session creation, invoice generation, reporting, and compliance.
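The difference between tax-inclusive and tax-exclusive pricing is easy to state and easy to get wrong in code. The simplified sketch below assumes a single flat rate, integer minor units (cents, which is how Stripe expresses amounts), and half-up rounding. Real calculations, including automated ones, depend on jurisdiction-specific rules that it ignores. The function names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Tuple


def _round(x: Decimal) -> int:
    """Round to a whole number of minor units (assumed half-up rounding)."""
    return int(x.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


def split_exclusive(net_cents: int, rate: Decimal) -> Tuple[int, int, int]:
    """Tax-exclusive: the listed price is net; tax is added on top. Returns (net, tax, total)."""
    tax = _round(Decimal(net_cents) * rate)
    return net_cents, tax, net_cents + tax


def split_inclusive(gross_cents: int, rate: Decimal) -> Tuple[int, int, int]:
    """Tax-inclusive: the listed price already contains tax, so back it out."""
    tax = _round(Decimal(gross_cents) * rate / (1 + rate))
    return gross_cents - tax, tax, gross_cents
```

At a 20% rate, the same 1000-cent list price gives `split_exclusive(1000, Decimal("0.20")) == (1000, 200, 1200)` but `split_inclusive(1000, Decimal("0.20")) == (833, 167, 1000)`. The customer pays a different amount, and the business keeps different net revenue. This is why the choice has to stay consistent across session creation, invoices, and reporting.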

Handling this correctly ensured the system was production-ready across regions—not just locally functional.

Building a Platform-Agnostic Architecture

Because multiple internal platforms would consume the service, the architecture had to remain neutral.

Key design decisions included:

- Service-agnostic REST endpoints

- Metadata-based routing

- Callback mechanisms to notify calling platforms

- Stripe as the source of truth for pricing

- Normalized relational schema for traceability
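Metadata-based routing and callbacks can work together. The originating platform is recorded in Stripe metadata when the checkout is created, and the webhook handler later uses it to decide whom to notify. The minimal sketch below rests on several hypothetical pieces: a `platform` metadata key, a per-platform registry of callback URLs and shared secrets, and an `X-Signature` header. It only builds the request and does not send it.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class Platform:
    callback_url: str
    secret: bytes


# Hypothetical registry; in practice this would live in configuration or the database.
PLATFORMS: Dict[str, Platform] = {
    "analytics-app": Platform("https://analytics.example.com/billing/callback", b"s1"),
    "crm-app": Platform("https://crm.example.com/hooks/payments", b"s2"),
}


def build_callback(metadata: Dict[str, str], event: Dict[str, Any]) -> Dict[str, Any]:
    """Return URL, headers, and body for notifying the platform named in metadata."""
    name = metadata.get("platform")  # hypothetical metadata key set at checkout creation
    platform = PLATFORMS.get(name or "")
    if platform is None:
        raise LookupError(f"no callback registered for platform {name!r}")
    body = json.dumps(event, sort_keys=True, separators=(",", ":")).encode()
    # Sign the exact bytes sent so the receiver can verify them (hypothetical header name).
    signature = hmac.new(platform.secret, body, hashlib.sha256).hexdigest()
    return {
        "url": platform.callback_url,
        "headers": {"Content-Type": "application/json", "X-Signature": signature},
        "body": body,
    }
```

Signing outgoing callbacks lets each consuming platform authenticate the payment service, just as the service authenticates Stripe.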

The database schema was designed around clarity and auditability:

- user-to-customer mapping

- checkout sessions

- transaction logs

- credit balances

- usage records

- one-time purchases

When money is involved, observability and traceability are essential.
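Below is one possible layout for the tables listed above. It is written as SQLite DDL run from Python so it is self-contained. All table and column names are assumptions, and a production service would use its own database engine and migration tooling. The unique constraints do much of the auditability and idempotency work. A Stripe customer maps to exactly one user, and a Stripe event ID can be logged only once. A usage reference can be recorded only once. Credit balances also cannot go negative.

```python
import sqlite3

# Hypothetical schema; names and types are illustrative only.
SCHEMA = """
CREATE TABLE customers (
    user_id            TEXT PRIMARY KEY,
    stripe_customer_id TEXT NOT NULL UNIQUE
);
CREATE TABLE checkout_sessions (
    stripe_session_id TEXT PRIMARY KEY,
    user_id           TEXT NOT NULL REFERENCES customers(user_id),
    platform          TEXT NOT NULL,
    status            TEXT NOT NULL,
    created_at        TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE transaction_log (
    id              INTEGER PRIMARY KEY,
    stripe_event_id TEXT NOT NULL UNIQUE,
    user_id         TEXT NOT NULL REFERENCES customers(user_id),
    event_type      TEXT NOT NULL,
    amount_cents    INTEGER,
    currency        TEXT,
    recorded_at     TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE credit_balances (
    user_id TEXT PRIMARY KEY REFERENCES customers(user_id),
    balance INTEGER NOT NULL CHECK (balance >= 0)
);
CREATE TABLE usage_records (
    id        INTEGER PRIMARY KEY,
    user_id   TEXT NOT NULL REFERENCES customers(user_id),
    credits   INTEGER NOT NULL CHECK (credits > 0),
    reference TEXT NOT NULL UNIQUE,
    used_at   TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE one_time_purchases (
    id                INTEGER PRIMARY KEY,
    user_id           TEXT NOT NULL REFERENCES customers(user_id),
    stripe_price_id   TEXT NOT NULL,
    stripe_session_id TEXT NOT NULL UNIQUE REFERENCES checkout_sessions(stripe_session_id),
    purchased_at      TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
"""


def create_schema(conn: sqlite3.Connection) -> None:
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    conn.executescript(SCHEMA)
```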

Webhooks: The Core of Reliability

Webhook processing became the critical component.

Each event had to:

- Verify Stripe’s signature

- Prevent duplicate processing

- Update the database safely

- Synchronize identity attributes

- Notify the appropriate platform

- Never grant incorrect access

Handling duplicate events, retries, partial failures, and ordering issues required careful design.
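The minimal sketch below shows two guards that address duplicates and ordering. It uses the fields Stripe events carry (`id`, `created` as a Unix timestamp, and `data.object`) and assumes the object has an `id` and a `status`, as a subscription does. An in-memory store stands in for the database, and the class name is hypothetical. Duplicates are dropped by event ID. Out-of-order events are detected by comparing `created` with the last event applied to the same object, and stale events are recorded but not allowed to overwrite newer state. Because `created` has one-second resolution, it cannot order events that occur within the same second. Re-fetching the object from the Stripe API before acting is a common alternative when ordering matters.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Set


@dataclass
class WebhookProcessor:
    """In-memory stand-in for processed-event and per-object state tables (hypothetical)."""
    processed: Set[str] = field(default_factory=set)
    last_applied: Dict[str, int] = field(default_factory=dict)  # object id -> event.created
    state: Dict[str, str] = field(default_factory=dict)         # object id -> status

    def handle(self, event: Dict[str, Any]) -> str:
        event_id = event["id"]
        if event_id in self.processed:
            return "duplicate"  # the same event can be delivered more than once
        obj = event["data"]["object"]
        obj_id, created = obj["id"], event["created"]
        if created < self.last_applied.get(obj_id, 0):
            # An older event arrived after a newer one: record it, but don't regress state.
            self.processed.add(event_id)
            return "stale"
        # In a real database, these writes and the processed-ID insert belong in one
        # transaction so a crash cannot leave the event half-applied.
        self.state[obj_id] = obj["status"]
        self.last_applied[obj_id] = created
        self.processed.add(event_id)
        return "applied"
```

The endpoint should still return a 2xx response for duplicate and stale events. Stripe retries deliveries that do not receive one.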

The biggest realization was this:

The happy path is simple. The edge cases define the system.

Deployment and Production Readiness

To ensure operational stability, I:

- Containerized the service using Docker

- Implemented CI/CD pipelines for automated deployment

- Deployed to a cloud-based container environment

- Managed database migrations automatically

- Added structured logging and global exception handling

- Secured secrets via managed secret storage

This transformed the project from a codebase into a reliable production service.
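Structured logging here means writing one machine-parseable record per log line, so payment activity can be searched by fields such as event ID or user ID. The minimal standard-library sketch below emits JSON. It assumes a hypothetical `context` dict passed through `extra`, which avoids clashing with built-in `LogRecord` attributes such as `message`. Many services use a dedicated logging library instead.

```python
import json
import logging
from typing import Any, Dict


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload: Dict[str, Any] = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S%z"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical convention: logger.info(..., extra={"context": {...}}) adds fields.
        context = getattr(record, "context", None)
        if isinstance(context, dict):
            payload.update(context)
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload, default=str)


def get_logger(name: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stderr, configuring it only once."""
    logger = logging.getLogger(name)
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    return logger
```

A call such as `get_logger("payments").info("credits granted", extra={"context": {"event_id": "evt_123", "user_id": "u1"}})` writes a single JSON line that includes `event_id` and `user_id` as top-level fields.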

What This Project Changed for Me

This experience significantly strengthened my engineering maturity.

I learned to:

- Design for failure, not just success

- Build idempotent systems

- Think in events, not just requests

- Treat databases as contracts

- Separate business logic from integration logic

- Build reusable infrastructure rather than product-specific code

Most importantly, I learned that payment systems are not features; they are foundations. They require careful thought, architectural discipline, and a strong understanding of real-world failure modes.

Final Reflection

Despite shifting requirements, incomplete inputs, frontend delays, and a major business model pivot, the result became a flexible and reusable payment infrastructure capable of supporting multiple SaaS platforms consistently.

What started as “Stripe integration” evolved into a fully centralized payment backbone—built to scale, adapt, and operate reliably in production.

And that transformation—from writing features to designing systems—was the most valuable outcome of the project.